Container Management Tools and Network Design

The Painful Process of Learning

Monday 23rd June 2025

Now that I have an underlying compute infrastructure, it’s time to add some services. However, there are a few considerations:

What tool should I use? Docker, Podman and Kubernetes are all available.

And how can I segment the network?

The tools must fulfill some criteria:

It has to support IPv6, preferably being able to run IPv6 only.

It has to support high-availability, at least 2 node redundancy.

I need to set it up in a relatively short time-frame.

Container Management tools available

Docker

First of all there is Docker, which I have the most experience with (check my Docker posts here if you want).

Benefits of Docker:

Well-known, at least to me.

Not so steep learning curve, especially since I already have experience with it.

Supports Docker Swarm, which is a simple solution to achieve high-availability

Drawbacks:

It has some IPv6 limitations but I have managed to work with most of them. There was a serious problem where you couldn’t specify a default IPv6 DNS server for containers, but that seems to have been silently resolved now.

However, I had a devastating realization: Docker Swarm does not support IPv6! I have updated my post about Docker Swarm networks. While you can get an IPv6 address on your container, port forwarding won’t work.

Podman

Podman is an interesting alternative. I have so far written only one article about podman where I compare it to Docker.

Benefits of Podman:

Even though Podman may not be as well-known as Docker, it is very easy to adapt to. You basically substitute “podman” for “docker” in every command.

Podman is even compatible with Docker Compose.

It is allegedly more secure than Docker by default, probably because it lets you choose between rootless and rootful containers (see the link above). I’m not a security expert though, so I can’t say how big of a deal this is.

Drawbacks of Podman:

It does not have an equivalent to Docker Swarm. Podman seems to only run on a single node.

Podman has some IPv6 caveats of its own, but I’m sure you can work with them.

Kubernetes (k8s)

I have just started researching how Kubernetes works, so not everything here may be factually correct. At first I thought Kubernetes worked in conjunction with Docker and Podman, but it runs fine without them installed. Apparently, Kubernetes handles both management and orchestration very well on its own.

So why bother considering Docker or Podman? Because k8s is extremely complex! While it may make sense in a Google datacenter, learning it and setting it up for a small business is, in my opinion, a huge undertaking. Sure, if you know how to do it, go for it. But if you are new to containerization like me, it’s months away.

There are, however, solutions like microk8s from Canonical that drastically reduce the deployment complexity of a Kubernetes cluster. Even so, in a small environment, Docker Swarm is still more convenient:

There are two types of hosts when creating a cluster:

Controller/Manager Nodes - Managing the worker nodes

Worker Nodes - Running the services/containers.

Kubernetes requires a separate controller node, making a High-Availability setup require at least 3 nodes.

Docker Swarm can run controller and worker roles on the same node, but still needs an odd number of managers to be fault tolerant (see the links at the end of this post).
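The odd-number rule comes down to majority arithmetic. Here is a minimal sketch, assuming the managers agree by simple majority (which is how the linked articles describe it). The function names `quorum` and `tolerated_failures` are my own and not part of any Docker or Kubernetes API.

```python
def quorum(managers: int) -> int:
    """Smallest majority of a manager set of the given size."""
    if managers < 1:
        raise ValueError("need at least one manager")
    return managers // 2 + 1


def tolerated_failures(managers: int) -> int:
    """How many managers can be lost while a majority is still reachable."""
    return managers - quorum(managers)


if __name__ == "__main__":
    # Compare odd and even manager counts side by side.
    for n in range(1, 8):
        print(f"{n} managers: quorum {quorum(n)}, "
              f"tolerates {tolerated_failures(n)} failure(s)")
```

Under this assumption, a fourth manager adds nothing over three: both setups survive the loss of exactly one manager, and two managers survive none.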

But how about the IPv6 support? Based on what I have read so far, it seems like Kubernetes may not support IPv6 flawlessly either, but you can probably work with it. At least dual-stack seems to be supported on microk8s.

Conclusion

Based on the comparison so far: if Docker Swarm had supported IPv6, it would have been my favorite. However, IPv6 support is non-negotiable, so Kubernetes seems to be the only valid option, even though it looks like a huge project at the moment.

I’ll probably start with a single Docker node with a backup solution, then learn Kubernetes and microk8s on the side.

Network Segmentation

But there is another dilemma: What is the most efficient way to prevent container applications from talking to each other?

Since I didn’t know beforehand what works and what doesn’t, I just had to design a network that made sense to me and then find out whether it would work.

What doesn’t work

Docker and VRFs

My initial thought was to run containers on different VRFs. See the picture:

Explanation:

My datacenter services are categorized into three macro-segments:

INT = Internal Services

PUB = Public Services

NMS = Network and Management Services

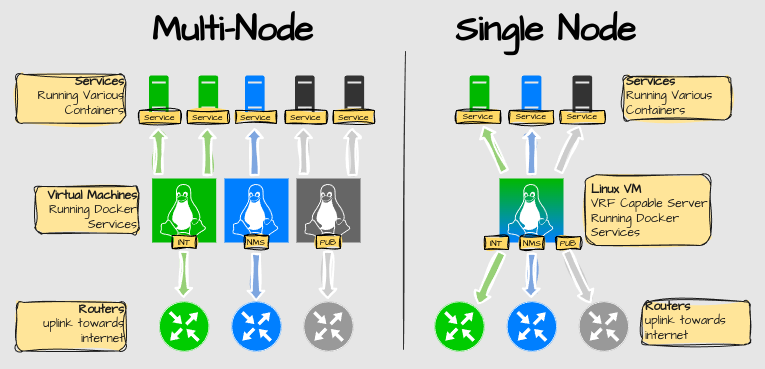

On the left, a simple configuration would be to create a VM per macro segment. No separate routing tables are needed. The downside is that I need to create at least 6 nodes for a high-availability design.

On the right is a more complex solution: a Linux server with VRF support. Each macro segment has its own VRF. Only 2 nodes are required for HA.

The problem is that no container management platform seems to support VRFs.

Containerized Router with Bridge interfaces

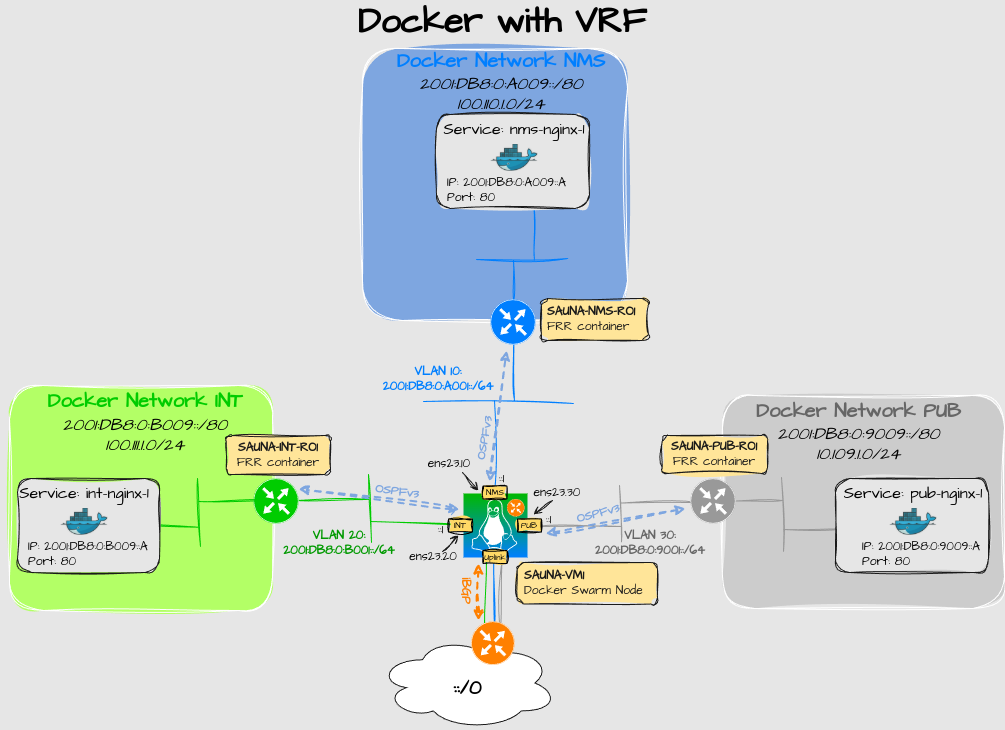

I tried one experiment to work around it. Instead of the host being the default gateway for the bridge networks, I was planning to let FRR containers do the routing:

Here I’m using the MACVLAN driver on the outside interfaces of the FRR containers to connect to subinterfaces on the host. Inside interfaces are regular bridges.

Unfortunately I hit a wall, and I have updated my Docker article about reaching containers externally. Docker has added a new bridge option to turn off automatic default GW creation on bridge interfaces, but for IPv4 only. Even if I could turn off GW creation for IPv6, there is still a limitation when creating a network: I can’t decide which containers should have a default gateway and which shouldn’t. It’s either everyone or nobody. I still have to use the IPVLAN_L2 driver on the inside to make it work, as I have demonstrated in Containerized Router with VyOS.

Using IPVLAN_L2 Driver

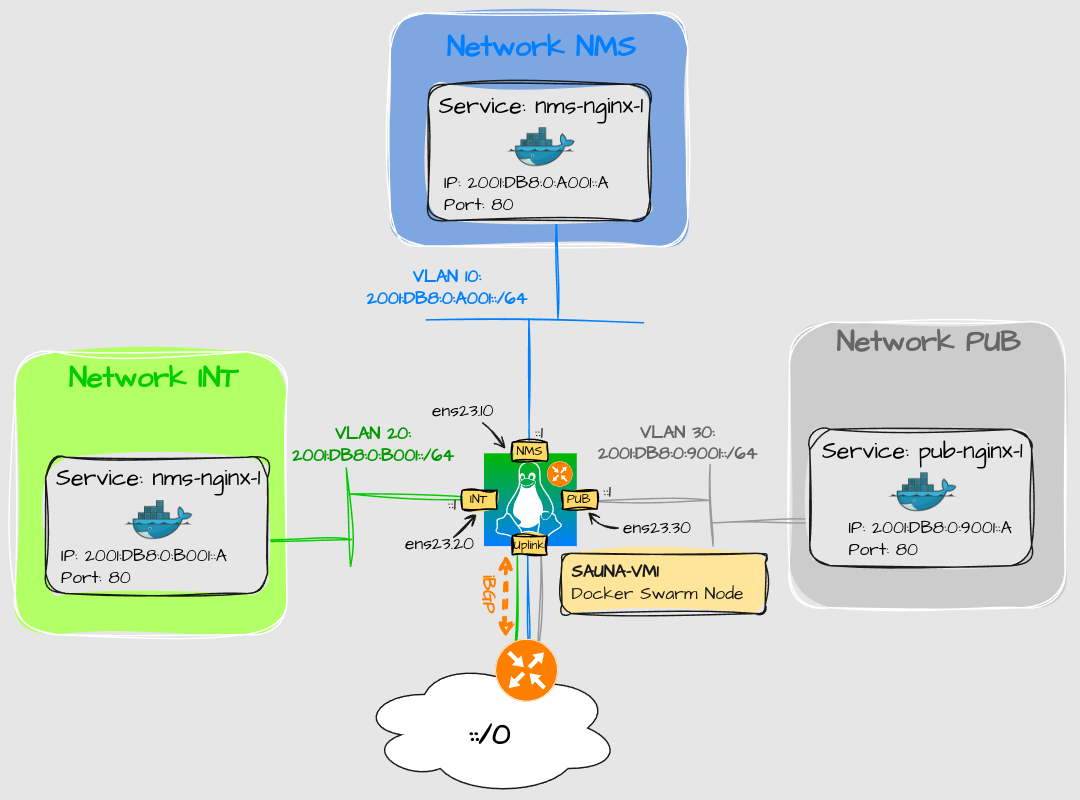

If I have to use the IPVLAN_L2 Driver, there is no need for a router container in the first place, or so I thought. My next experiment was to only use IPVLAN_L2 Driver:

Each VLAN subinterface is a member of its respective VRF. Unfortunately, this doesn’t work either.

I noticed this when reading up on IPVLAN mode:

“NOTE: the containers can NOT ping the underlying host interfaces as they are intentionally filtered by Linux for additional isolation.”

The same seems to be true for the MACVLAN driver. I found out that it’s more than just ICMP traffic that is getting blocked; all traffic to the Linux host is denied.

My conclusion is that IPVLAN and MACVLAN can only be used when the gateway is external, like the routing scenario I built before.
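To make the external-gateway case concrete, here is a small sketch that assembles a `docker network create` command for an IPVLAN L2 network. The helper `ipvlan_l2_command`, the network name, the parent subinterface `eth0.10` and the `2001:db8::` documentation prefix are all placeholders of mine, not values from my lab. The gateway address is assumed to live on an external router, not on the Docker host.

```python
import ipaddress
import shlex


def ipvlan_l2_command(name: str, parent: str, subnet: str, gateway: str) -> str:
    """Build a `docker network create` command for an IPVLAN L2 network.

    The gateway is assumed to be an external router on the parent VLAN,
    since containers cannot reach the host's own interface.
    """
    net = ipaddress.ip_network(subnet)
    gw = ipaddress.ip_address(gateway)
    if gw not in net:
        raise ValueError(f"gateway {gw} is outside subnet {net}")

    args = ["docker", "network", "create", "-d", "ipvlan"]
    if net.version == 6:
        # IPv6 has to be enabled explicitly on the network.
        args.append("--ipv6")
    args += [
        "--subnet", str(net),
        "--gateway", str(gw),
        "-o", f"parent={parent}",
        "-o", "ipvlan_mode=l2",
        name,
    ]
    return shlex.join(args)


if __name__ == "__main__":
    # Hypothetical values: VLAN 10 subinterface, documentation prefix.
    print(ipvlan_l2_command("int_net", "eth0.10",
                            "2001:db8:10::/64", "2001:db8:10::1"))
```

The script only prints the command, so it can be reviewed before anything is created. Depending on the Docker version, an IPv4 subnet may still be allocated alongside the IPv6 one.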

What does work, sort of, but not really

The only option I have left, if I want to keep my current design, is to run FRR containers and explicitly use the MACVLAN and IPVLAN drivers for the interfaces. But there are some drawbacks:

The first thing is the caveat of having to manually change the IP of the router interface after deployment, since you can’t assign the same IP address as the default GW.

The second thing is that it gets harder to isolate applications when everything is in the same subnet. Docker’s iptables settings do not work with those drivers either. With the bridge driver you can just create a bridge per application and then implement firewall rules between the bridges.
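The bridge-per-application idea can be sketched as follows, assuming each application gets its own /64 carved out of a larger prefix. The helper `bridge_commands`, the `br-<app>` naming and the documentation prefix are made up for illustration. The firewall rules between the bridges are left out on purpose.

```python
import ipaddress
import shlex


def bridge_commands(prefix: str, apps: list[str]) -> list[str]:
    """Return one `docker network create` command per application.

    Each application gets the next free /64 from the given prefix.
    """
    pool = ipaddress.ip_network(prefix)
    available = 2 ** (64 - pool.prefixlen) if pool.prefixlen <= 64 else 0
    if len(apps) > available:
        raise ValueError(f"{pool} only holds {available} /64 subnet(s)")

    commands = []
    for app, subnet in zip(apps, pool.subnets(new_prefix=64)):
        commands.append(shlex.join([
            "docker", "network", "create",
            "-d", "bridge",
            "--ipv6",
            "--subnet", str(subnet),
            f"br-{app}",  # hypothetical naming scheme
        ]))
    return commands


if __name__ == "__main__":
    for line in bridge_commands("2001:db8:0:100::/56", ["web", "db"]):
        print(line)
```

With one subnet per application, rules between bridges can match on the source and destination prefix instead of on individual container addresses.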

Time for some reflection

At this point I’m thinking:

“Have I made this design too complex?”

“Do I really need to divide the services into three categories?”

“Can’t I just rely on the Linux firewall?”

I have accepted the fact: the Single-Node Multi-VRF solution is dead. I have to admit that I might have been thinking a bit old school. Today’s datacenters rely heavily on SDN and microsegmentation. Perhaps I should consider one routing table with local firewall rules in place. If I need to scale up, I should also consider implementing EVPN in the future.

In my defense, I still think that my initial design should be an option. If nothing else, it enables the freedom to lab and test different solutions. And also, why do you have to do the same design as everybody else? Right now it feels like it’s because nothing else is supported. Container networking is like a sandbox you thought was much bigger than it actually is.

Interesting read about why Kubernetes needs an odd number of managers:

https://www.siderolabs.com/blog/why-should-a-kubernetes-control-plane-be-three-nodes/

Here is an article from Docker’s perspective:

https://docs.docker.com/engine/swarm/admin_guide/