Upgrading IT systems

A story of a risk taker

14th August, 2023

I have come to realize one thing about myself: I am a risk taker. Not the adventure-seeker kind, but more of the professional kind (arguably, upgrading an IT system is sometimes an adventure in itself). Over the years it has cost me plenty of things:

Money

Time

Sleep

Missed social events

Sanity

For better or worse, my wife is still with me.

Not to mention the poor customers for whom my recklessness has caused unnecessary downtime. But I have never failed so badly that there wasn’t a valuable lesson to be learnt.

While being a risk taker has its downsides, I would never have learnt so much if I hadn’t dared to try out new things and fail. At this point, I feel like I’m starting to become an expert on Murphy’s Law. So I thought I would share some of my experiences here, and what I have learnt so far.

The secret of IT-consulting

I’m going to let you in on a little secret first: the consultant you hired to install that bleeding-edge server cluster may seem very knowledgeable. Many times, though, he might not have more experience with that system than you do. The difference is that he (most likely a man, since they are the bigger risk takers) is willing to risk his spare time, health and reputation to do the job. There is a quote; I forget where I read it, but it went something like this:

Should we mess things up ourselves, or should we hire a consultant to do it for us?

In the end, though, after the fire is put out, the consultant will have gained a lot of valuable knowledge from the experience.

Personally, I get bored if I have to do the same task more than twice: once to learn, and a second time to verify that I actually know it. So if you hire me to do something, you’re lucky if I have done it once!

Lessons Learned

The riskiest tasks are hardware and software upgrades. Keeping software and hardware up to date is important for security and performance, and also to unlock new features. Many hesitate to make changes to their environment, though, because they don’t know what the outcome will be. With Cisco ISE, for example, it was not until version 2 started to reach end of support that we began getting requests to help upgrade to version 3. And they were long jumps too: usually it was necessary to go from 2.4 up to 2.7 and then to 3.1. Not that I had done that many 3.1 upgrades myself at the time, but I was there to take the blame if anything went wrong. It went surprisingly well most of the time.
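To make the "long jumps" concrete, here is a minimal Python sketch that finds a multi-hop path between two versions. The hop table only contains the upgrades I mention in this post; it is an assumption for illustration and not Cisco's official support matrix, so check the upgrade guide for your exact release and patch level.

```python
from collections import deque

# Direct upgrade hops mentioned in this post. This is NOT an official
# support matrix; it is an illustrative assumption.
DIRECT_UPGRADES = {
    "2.2": ["2.4"],
    "2.4": ["2.7"],
    "2.7": ["3.1"],
    "3.1": ["3.2"],
}


def upgrade_path(current, target, hops=DIRECT_UPGRADES):
    """Return the shortest list of versions from current to target, or None."""
    queue = deque([[current]])
    seen = {current}
    while queue:
        path = queue.popleft()
        if path[-1] == target:
            return path
        for nxt in hops.get(path[-1], []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(path + [nxt])
    return None  # no known path, e.g. a downgrade


if __name__ == "__main__":
    print(" -> ".join(upgrade_path("2.4", "3.1")))
```

Each hop in the returned list is its own upgrade with its own backup and its own change window, which is why these jumps take so long.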

Do not underestimate the complexity of an IT platform

Usually when an upgrade goes great, it is because you have never done it before. You are a humble beginner so you:

start reading up on the installation guides;

ask your colleagues;

include someone with more experience than yourself, in case shit hits the fan.

That is how it was for me when I upgraded ISE from 2.2 to 2.4 for the first time. It went fine. Upgrading from 2.4 to 2.7, and from 2.7 to 3.1, also went fine. By now I was starting to get confident, so a jump from 3.1 to 3.2 should be a piece of cake, right? I’ll get back to that.

Expect the unexpected

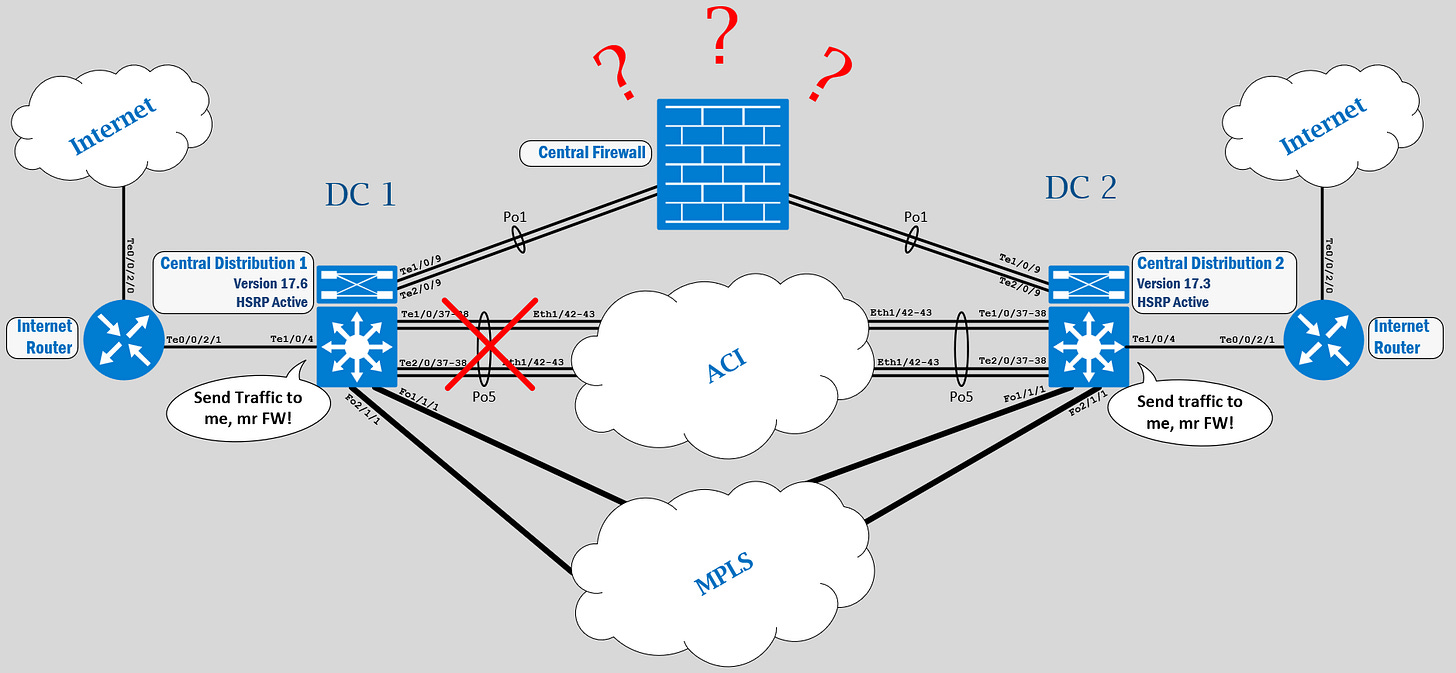

First, another example where shit really hit the fan and I missed the company’s Christmas dinner. I was going to upgrade the software on two Cisco Catalyst 9500-40X switches from version 17.3 to 17.6 for a municipality. I had already done this upgrade on hundreds of similar switches, like the Catalyst 9300-48P and Catalyst 9500-48YC, without a single problem. Plus, I was only upgrading one switch at a time, so even if something were to fail, there would still be one leg to stand on, right?

The moment the first switch booted up with the new software, a complete network outage occurred. I remember being completely bewildered about what had just happened. After many hours of troubleshooting, I stumbled upon a log message:

%Command rejected : MTU Config mismatch for interface Twe1/0/21 in group 5

Cisco sometimes changes its configuration syntax from one release to the next. What happened here is that an aggregated port started to require the MTU setting to be configured on the virtual interface, whereas in the previous version you only configured it on the physical ports. That took down the link to the ACI fabric, which was carrying HSRP traffic back and forth between the central distribution pair. The result was a split-brain situation where both switches went active, leaving the central firewall confused about where to send traffic.

That made perfect sense, and I wonder why I didn’t think of it earlier.

It's so difficult, isn't it? To see what's going on when you're in the absolute middle of something? It's only with hindsight we can see things for what they are.

I don’t think I could ever have predicted this, so what could I have done differently? Maybe I could have asked these questions:

“What are these switches actually doing? What if this link goes down? What will happen? What should I expect?”

Then maybe, if something similar were to happen again, I would solve the issue a lot faster. And also check the damn log.
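One way to ask "what should I expect?" before the change window is to let a script look for the kind of inconsistency that bit me. The following is a rough Python sketch, not a tool I actually used: it reads a saved configuration as text and flags port-channel members whose MTU differs from the MTU on the port-channel interface itself. The sample configuration, the MTU value and the function names are made up for illustration; only the interface and group number are borrowed from the log message above.

```python
import re


def parse_interfaces(config_text):
    """Map interface name -> {'mtu': int or None, 'group': int or None}."""
    interfaces = {}
    current = None
    for line in config_text.splitlines():
        if line.startswith("interface "):
            current = line.split(None, 1)[1].strip()
            interfaces[current] = {"mtu": None, "group": None}
        elif current and line.startswith(" "):
            stripped = line.strip()
            m = re.match(r"mtu (\d+)$", stripped)
            if m:
                interfaces[current]["mtu"] = int(m.group(1))
            m = re.match(r"channel-group (\d+)\b", stripped)
            if m:
                interfaces[current]["group"] = int(m.group(1))
        else:
            current = None  # left the interface block
    return interfaces


def mtu_mismatches(config_text):
    """Return (member, group, member_mtu, bundle_mtu) for every mismatch."""
    interfaces = parse_interfaces(config_text)
    problems = []
    for name, info in interfaces.items():
        if info["group"] is None:
            continue
        bundle = interfaces.get(f"Port-channel{info['group']}", {"mtu": None})
        if info["mtu"] != bundle["mtu"]:
            problems.append((name, info["group"], info["mtu"], bundle["mtu"]))
    return problems


# Hypothetical sample: MTU set on one physical member only.
SAMPLE = """\
interface Port-channel5
 switchport mode trunk
!
interface TwentyFiveGigE1/0/21
 mtu 9000
 channel-group 5 mode active
!
interface TwentyFiveGigE1/0/22
 channel-group 5 mode active
"""

if __name__ == "__main__":
    for member, group, member_mtu, bundle_mtu in mtu_mismatches(SAMPLE):
        print(f"{member} (group {group}): member MTU {member_mtu}, bundle MTU {bundle_mtu}")
```

A check like this only finds the problems you already know to look for, so it complements the questions above rather than replacing them.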

Think twice, or thrice, when it comes to changes regarding layer 2.

I’m talking about the TCP/IP model. When performing routing changes (layer 3), there is usually not too much impact. Of course, it depends on how badly you mess up. Personally, I can’t recall ever causing a devastating network outage because of a routing change. What I do have bad experiences with is changes on layer 2.

Designing redundancy on layer 2 is tricky business. Strictly speaking, you are not allowed to have redundant links, because a given MAC address must only be visible on one interface. There are protocols like spanning tree that usually prevent redundant paths from wreaking havoc in your datacenter, but you can still have a bad day if you are not careful.

I remember a few years ago, when I was even more reckless than I am today, I was “just going to change” the spanning tree mode from Rapid PVST to MST to save resources in the datacenter. This was in the middle of the summer with no formal change window. I just said there wouldn’t be any problems. As soon as I entered “spanning-tree mode mst”, the terminal froze. At least I had the common sense to be inside the datacenter while performing the change. I quickly grabbed my console cable, with my heart beating out of my chest, and reverted the change. Obviously it was not just a little change. The damage wasn’t too bad: a complete network outage, but it lasted maybe 30 seconds. But it was in the middle of the summer without a change window! In retrospect, I can’t believe what I was thinking!

After planning a change window and reviewing the configuration one more time, it went perfectly fine.

Be skeptical of things you read on the Internet.

It’s true that you can never know beforehand how a software upgrade will turn out. There are so many variables like software, hardware and configuration settings. Every deployment is different. It might work fine the first time, the second and even the third time as well. But eventually you will get burned. This is the story of the Cisco ISE 3.2 upgrade that burned me.

I had recently upgraded ISE from 2.7 to 3.1 without any problems. I even wrote a guide on the topic. This time I followed my own guide only haphazardly, because the experience was still fresh in my memory. I did, however, only glance over one tiny detail, which I will mention later when it becomes relevant.

But regardless, I did all the other steps correctly: taking a backup, disabling PAN auto-failover, purging operational data and so forth. When I ran the upgrade readiness tool (URT), however, it failed (due to CSCwe24589). That was a red flag for me. I started to ask the Internet for help and I found this thread.

There was a lot of negative feedback about issues after upgrading to ISE 3.2. One guy advised doing a re-image instead, to get rid of a lot of trash in the database that may have built up from previous versions. It made sense to me. However, it is also claimed that a major upgrade removes most of the trash anyway. I have yet to verify whether that is true, but I would imagine that if the underlying operating system changes, as you can see in Cisco’s upgrade guide, there is probably a cleanup of the databases going on as well.

Even if the bug with the URT was only cosmetic, I decided to do a complete re-imaging instead of an upgrade. A chance to try something new and a fresh install of ISE with ultimate stability, that was my intent anyway.

The road to hell is built with good intentions

Upgrading ISE takes a long time. I usually estimate around 12 hours for 8 nodes, with patching included. A re-image may take longer than that, so I extended the change window to 2 days. There is very little risk of downtime in an 8-node appliance cluster, so you can do it during the daytime (unless your PSN nodes are already overloaded).

The problems started right away with the CIMC KVM interface I used to transfer the installation file. The KVM kept disconnecting after 30 minutes, breaking the installation. I found a setting inside the CIMC GUI where I could increase the SSH and HTTPS timeout to 3 hours, but that didn’t affect the KVM HTML interface. I was already a few hours behind. It turned out to keep disconnecting because I was using an unsupported browser. Apparently you should only use Firefox or Chrome with the KVM interface. I have yet to find where Cisco has documented that, but I can attest that Firefox works.

Read the F-ing Manual

The next attempt was to use a USB flash drive, since the KVM interface was way too slow. It went significantly faster, even if I had to run back and forth to the datacenter.

At first, the installation would not succeed. Apparently, making bootable USB media for ISE is not as straightforward as making it for… let’s say an Ubuntu machine. When the bootable media is finished, you have to edit 3 files so that the ISE appliance knows you are trying to install from a USB drive and not a CD-ROM. Once I did that, the installation went fine.

Then I remembered that I hadn’t changed the setting in Rufus from GPT to MBR! A big deal? GPT is the newer standard anyway. You would think the latest version of Red Hat uses GPT. A blog even mentioned that you should use GPT.

It seemed to work fine at first, but then the application server got stuck on initializing.

ise-pan02/admin#show application status ise

ISE PROCESS NAME STATE PROCESS ID

--------------------------------------------------------------------

Database Listener running 53068

Database Server running 123 PROCESSES

Application Server initializing

So even though it got that far, I hadn’t done it correctly, so I gave the procedure the benefit of the doubt and reinstalled with an MBR partition table instead. Then it started fine. By this time I was already on my second day and I wasn’t done with the first node, just because I had failed to read the manual correctly. To be fair, it was the first time I had ever performed a USB boot on a UCS appliance. You can’t expect it to go flawlessly.
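Staring at that status output and guessing whether "initializing" means "slow" or "stuck" cost me time. As a small Python sketch, the output can be parsed so that a script (or a tired consultant) sees at a glance which services are not up. The state names "not running" and "disabled" in the pattern are my assumption about what other states can look like; only "running" and "initializing" appear in the output above.

```python
import re

# State words we look for. "running" and "initializing" come from the
# output in this post; the other two are assumptions.
STATE_RE = re.compile(
    r"^(?P<name>.+?)\s+(?P<state>not running|running|initializing|disabled)\b"
)


def parse_status(output):
    """Map service name -> state from 'show application status ise' text."""
    services = {}
    for line in output.splitlines():
        m = STATE_RE.match(line.strip())
        if m:
            services[m.group("name")] = m.group("state")
    return services


def not_ready(output):
    """Return the services that are still initializing or not running."""
    return [
        name
        for name, state in parse_status(output).items()
        if state in ("initializing", "not running")
    ]


SAMPLE_OUTPUT = """\
ISE PROCESS NAME STATE PROCESS ID
--------------------------------------------------------------------
Database Listener running 53068
Database Server running 123 PROCESSES
Application Server initializing
"""

if __name__ == "__main__":
    print(not_ready(SAMPLE_OUTPUT))
```

Run against periodic captures of the command, something like this makes it easier to decide on a hard time limit up front instead of waiting "just a bit longer" on a node that will never finish.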

Pride cometh before a fall

The problems seemed to never stop for me. After finally getting the first node up on 3.2, patching it and then importing the configuration, the system certificate could not be imported. The guy here expresses perfectly the state I was in at the time:

Guess what? That tiny detail I overlooked in my own guide was that I didn’t install the latest patch before exporting the configuration! So who is to blame here? Sure, I would like Cisco to write better software so we didn’t have to patch every second month, but really, I should have known better. I had simply gotten relaxed, thinking this would go just as smoothly as all the other upgrades in the past.

By the time I discovered my mistake I was already rolling back to 3.1, using KVM with HTML on Firefox to transfer the image. It took 4 hours.

3 days and 3 evenings and I’m back at square 1.

Do it all over, do it right.

This subtitle was my programming teacher’s favorite phrase (gör om, gör rätt). I didn’t learn anything about programming though.

After the rollback, I decided to do a regular upgrade from the latest patch. I’m sure it would work with a re-image from patch 7, but I didn’t wanna go through all that again just to find out.

I didn’t know that ISE 3.1 now has a URT built into the GUI, called Health Check. I ran that and discovered some expired internal CA certificates. It seems like from 3.0 the PAN node has to run the CA services, at least internally, while in the older 2.x versions it was optional. I fixed those issues and now, after spending a weekend upgrading 8 nodes, the ISE cluster is running on 3.2.

Conclusion: If it ain’t broken, don’t fix it.

Re-imaging an 8-node cluster turned out to be more cumbersome than I expected, and probably not worth the theoretical benefit. If you need to do something similar, dig deeper into Cisco’s documentation. It’s dangerous to assume something works a certain way just because you have done something similar in the past. If you can’t find what you are looking for, there is no harm in contacting a Cisco representative and asking: “Is this procedure supported?” It might save you some time.

Pet the cat

This advice is taken from Jordan Peterson’s book “12 Rules for Life: An Antidote to Chaos”. We all make mistakes along the way. After a bad day, or a bad week, it’s important to appreciate the small things in life. Pet the cat. When it starts purring, put your ear against its tummy and listen. It is very soothing.

The End.

Special Mention

I want to thank the long-time customer who has put up with my endeavors for quite some time now. I have learnt a lot there, if you know what I mean. Anyway, thanks for all your patience.