May 13th 2024.

Introduction

When I decided to convert my gaming PC into a server, I needed more network adapters. I wanted to make sure that they supported most of the features I could possibly have use for. That is why I went for Mellanox ConnectX.

A newer Mellanox ConnectX-6 is quite expensive, at around $1500. Luckily for me, I found two used ConnectX-3 cards for about $70 each.

I wanted to start configuring them right away, but only a few minutes in I discovered a rabbit hole of features I had never heard of. I realized that I needed to do a little research first.

Mellanox ConnectX cards are "SmartNICs", which means that they can offload work from the CPU and support features such as SR-IOV, Infiniband and RoCEv2. The confusion starts right away: what are these, and do I need them?

Mellanox ConnectX-3 Features

Here are some of the features briefly explained.

Infiniband

The Mellanox ConnectX-3 supports two modes: Infiniband or Ethernet. I'm familiar with good ol' Ethernet, so I assumed that Infiniband was just another layer 2 protocol. It turns out to be a separate network technology with its own link, network and transport layers.

Reading about Infiniband, I learned that it is widely used to interconnect the machines in High-Performance Computing (HPC) clusters so they can work together efficiently. SRP (SCSI RDMA Protocol), which gives a host access to SCSI storage on another machine over RDMA, would be a good use case. However, I don't have any other machine that supports Infiniband right now, and you typically also need an Infiniband switch, so it can get quite expensive. Therefore I'm sticking with Ethernet mode.

Sources:

https://www.reddit.com/r/homelab/comments/7efvij/use_cases_for_infiniband/

https://docs.nvidia.com/networking/display/mlnxofedv451010/srp+-+scsi+rdma+protocol

RoCEv2 = RDMA over Converged Ethernet version 2

RDMA (Remote Direct Memory Access) is the feature that offloads the CPU. It lets one machine read from or write to another machine's memory directly: the NICs move the data, without involving the remote CPU or the operating system in the transfer.

Note: Infiniband is also based on RDMA.
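To get a feel for what "memory-to-memory without the CPU" means, here is a toy Python model of a one-sided RDMA write. It is not a real RDMA API: `ToyNic`, `MemoryRegion` and `rdma_write` are hypothetical names, and a Python object stands in for the NIC hardware. It only mirrors the general idea that the owner registers a buffer and shares a remote key, after which a peer can write into that buffer while no application code runs on the target.

```python
import secrets
from dataclasses import dataclass, field


@dataclass
class MemoryRegion:
    """A buffer its owner has registered with the (toy) NIC."""
    buffer: bytearray
    rkey: int  # remote key the owner shares with peers it trusts


@dataclass
class ToyNic:
    """Hypothetical NIC model that serves one-sided RDMA writes by itself.

    No application callback runs on the target during a write: the "NIC"
    checks the remote key and the bounds, then copies bytes into memory.
    """
    regions: dict[int, MemoryRegion] = field(default_factory=dict)

    def register(self, size: int) -> MemoryRegion:
        """Register a zeroed buffer and hand back a random remote key for it."""
        rkey = secrets.randbits(32)
        while rkey in self.regions:  # avoid the (unlikely) key collision
            rkey = secrets.randbits(32)
        region = MemoryRegion(bytearray(size), rkey)
        self.regions[rkey] = region
        return region

    def handle_write(self, rkey: int, offset: int, data: bytes) -> None:
        """Validate the key and bounds, then place the bytes into memory."""
        region = self.regions.get(rkey)
        if region is None:
            raise PermissionError("unknown remote key")
        if offset < 0 or offset + len(data) > len(region.buffer):
            raise IndexError("write outside the registered region")
        region.buffer[offset:offset + len(data)] = data


def rdma_write(remote: ToyNic, rkey: int, offset: int, data: bytes) -> None:
    """Initiator side: hand the payload and target address to the remote NIC."""
    remote.handle_write(rkey, offset, data)


if __name__ == "__main__":
    server = ToyNic()
    region = server.register(16)                   # server registers 16 bytes
    rdma_write(server, region.rkey, 4, b"hello")   # client writes into it
    print(bytes(region.buffer))  # b'\x00\x00\x00\x00hello\x00\x00\x00\x00\x00\x00\x00'
```

The remote key check is what keeps this safe in real RDMA as well: a peer can only touch memory that was explicitly registered and shared with it.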

The original RoCE (v1) runs directly on top of Ethernet, so it is limited to a single layer 2 network. RoCEv2 runs over UDP/IPv4 or UDP/IPv6, which makes it routable, and it is the most popular version of RDMA today.
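To make "RoCEv2 runs over UDP" concrete, here is a minimal Python sketch that packs the two headers a RoCEv2 packet carries right after the IP header: a UDP header addressed to port 4791 and the 12-byte Infiniband Base Transport Header (BTH). The field layout follows my reading of the Infiniband specification; the function name, the example values and the choice of the "SEND Only" opcode are illustrative assumptions. The Ethernet and IP headers, the payload and the trailing ICRC checksum are deliberately not built here.

```python
import struct

ROCEV2_UDP_PORT = 4791   # IANA-assigned UDP destination port for RoCEv2
RC_SEND_ONLY = 0x04      # BTH opcode for a reliable-connection "SEND Only"
BTH_LEN = 12             # Base Transport Header size in bytes
ICRC_LEN = 4             # invariant CRC trailer the NIC appends (not built here)


def rocev2_headers(src_port: int, dest_qp: int, psn: int, payload_len: int,
                   opcode: int = RC_SEND_ONLY, p_key: int = 0xFFFF) -> bytes:
    """Build the UDP header plus Infiniband BTH that sit inside an IP packet."""
    pad = (-payload_len) % 4               # payload is padded to a 4-byte multiple
    flags = (pad & 0x3) << 4               # SE=0, MigReq=0, PadCnt=pad, TVer=0
    bth = (struct.pack("!BBHB", opcode, flags, p_key, 0)   # last byte reserved
           + (dest_qp & 0xFFFFFF).to_bytes(3, "big")       # destination queue pair
           + struct.pack("!B", 0)                          # AckReq=0 + reserved bits
           + (psn & 0xFFFFFF).to_bytes(3, "big"))          # packet sequence number
    udp_len = 8 + BTH_LEN + payload_len + pad + ICRC_LEN
    # This sketch leaves the UDP checksum at 0; RoCEv2 relies on the ICRC
    # for end-to-end integrity.
    udp = struct.pack("!HHHH", src_port, ROCEV2_UDP_PORT, udp_len, 0)
    return udp + bth


if __name__ == "__main__":
    hdr = rocev2_headers(src_port=49152, dest_qp=0x11, psn=0, payload_len=5)
    print(len(hdr), hdr.hex())
```

From a router's point of view this is just another UDP datagram, which is exactly why RoCEv2 can be routed while RoCE v1 cannot.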

iWARP = Internet Wide Area RDMA Protocol

iWARP runs over TCP or SCTP (Stream Control Transmission Protocol).

With iWARP, applications on one server can read from or write directly to the memory of applications running on another server, without requiring OS involvement on either server.
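The difference between the Ethernet-based RDMA flavours is easiest to see by stacking up their layers. This is a simplified Python summary, not something taken from a driver: the layer names are my shorthand for the headers defined in the RoCE and iWARP specifications, optional headers are left out, and `routable` is a hypothetical helper that simply checks whether IP appears in the stack.

```python
# Protocol layers, outermost first. Simplified: optional headers such as
# VLAN tags or the RDMA extended transport header are left out.
STACKS = {
    "RoCEv1": ["Ethernet (EtherType 0x8915)", "IB GRH", "IB BTH", "payload", "ICRC"],
    "RoCEv2": ["Ethernet", "IP (v4 or v6)", "UDP (port 4791)", "IB BTH", "payload", "ICRC"],
    # iWARP over TCP; over SCTP, DDP sits directly on SCTP and MPA is not used.
    "iWARP": ["Ethernet", "IP (v4 or v6)", "TCP", "MPA", "DDP", "RDMAP", "payload"],
}


def routable(transport: str) -> bool:
    """A transport can cross routers only if it is carried inside IP."""
    return any(layer.startswith("IP") for layer in STACKS[transport])


if __name__ == "__main__":
    for name, layers in STACKS.items():
        print(f"{name:7} routable={routable(name)!s:5}  " + " / ".join(layers))
```

RoCE reuses the Infiniband transport header (BTH) and swaps only what is underneath it, while iWARP builds its RDMA layers on top of a regular TCP connection.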

Sources:

https://www.techtarget.com/searchstorage/definition/Remote-Direct-Memory-Access

https://www.snia.org/sites/default/files/ESF/RoCE-vs.-iWARP-Final.pdf

IOV = Input/Output Virtualization

From Techtarget:

“I/O virtualization (IOV), or input/output virtualization, is technology that uses software to abstract upper-layer protocols from physical connections or physical transports. This technique takes a single physical component and presents it to devices as multiple components.”

Briefly explained, it makes it possible to take one physical NIC (or any PCIe hardware that supports IOV) and present it as multiple virtual NICs, similar to how you can create multiple partitions on a single hard drive. That is a very useful feature for datacenters with resource pools and multiple tenants!

There are two versions:

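SR-IOV (Single Root IOV) carves a PCIe device in a single host into multiple logical partitions that can share access to it simultaneously. The device exposes one physical function (PF) plus several virtual functions (VFs), and each VF can be assigned to a different VM.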

MR-IOV (Multi Root IOV) devices reside outside the host and are shared across multiple hardware domains. Imagine your graphics card not being plugged directly into your computer's motherboard, but sitting in a separate enclosure that several computers share over a PCIe fabric.

I actually bought two NICs. I was going to use one for storage and the other for VM traffic, but now I realize that a single NIC would be enough if I set up SR-IOV correctly.

Source: https://www.techtarget.com/searchstorage/definition/I-O-virtualization-IOV
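To check which PCIe devices on a Linux host can do SR-IOV, the kernel's PCI core exposes two sysfs files for each capable device: `sriov_totalvfs` (how many virtual functions, or VFs, the hardware supports) and `sriov_numvfs` (how many are currently enabled). The Python sketch below only reads those files. The function name and output format are my own, and whether VFs can also be created by writing to `sriov_numvfs` depends on the driver, so that part is deliberately left out.

```python
from pathlib import Path


def sriov_devices(sysfs_root: str = "/sys/bus/pci/devices") -> list[tuple[str, int, int]]:
    """Return (PCI address, enabled VFs, maximum VFs) for each SR-IOV capable device."""
    results: list[tuple[str, int, int]] = []
    root = Path(sysfs_root)
    if not root.is_dir():
        return results  # not Linux, or sysfs not mounted
    for dev in sorted(root.iterdir()):
        total_file = dev / "sriov_totalvfs"
        if not total_file.is_file():
            continue  # this device has no SR-IOV capability
        num_file = dev / "sriov_numvfs"
        enabled = int(num_file.read_text().strip()) if num_file.is_file() else 0
        results.append((dev.name, enabled, int(total_file.read_text().strip())))
    return results


if __name__ == "__main__":
    for address, enabled, maximum in sriov_devices():
        print(f"{address}: {enabled} of {maximum} virtual functions enabled")
```

The `sysfs_root` parameter only exists so the function can be pointed at a fake directory tree for testing; on a real host the default path is the one to use.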

Conclusion

Infiniband, iWARP and RoCE are all RDMA-based technologies that are best utilized for datacenter traffic. In other words, they are best suited for server-to-server communication rather than client-to-server communication.

SR-IOV is used to create multiple virtual NICs out of one physical NIC. IOV is not exclusive to NICs; it can be used with any type of PCIe hardware that supports it.

Mellanox ConnectX-3 in action

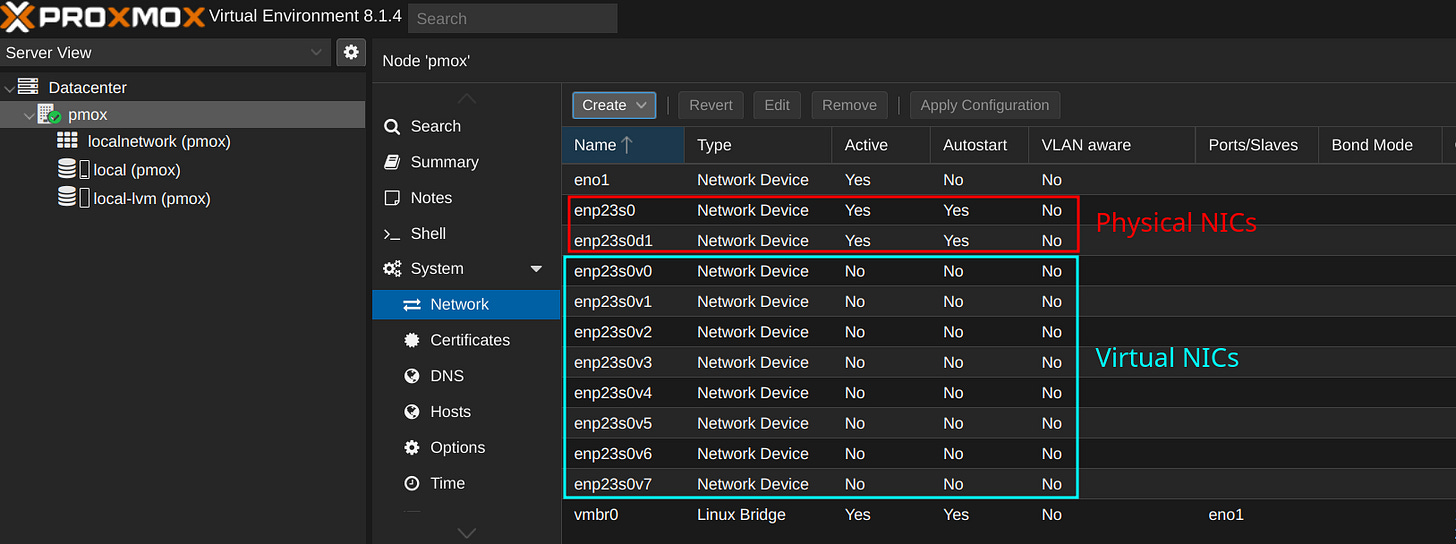

Here is an example how Mellanox ConnectX-3 interface adapters looks like in Proxmox VE when SR-IOV is activated:

The next post will describe how to install and configure a Mellanox ConnectX-3 for SR-IOV.